A Practical View on Tunable Sparse Network Coding

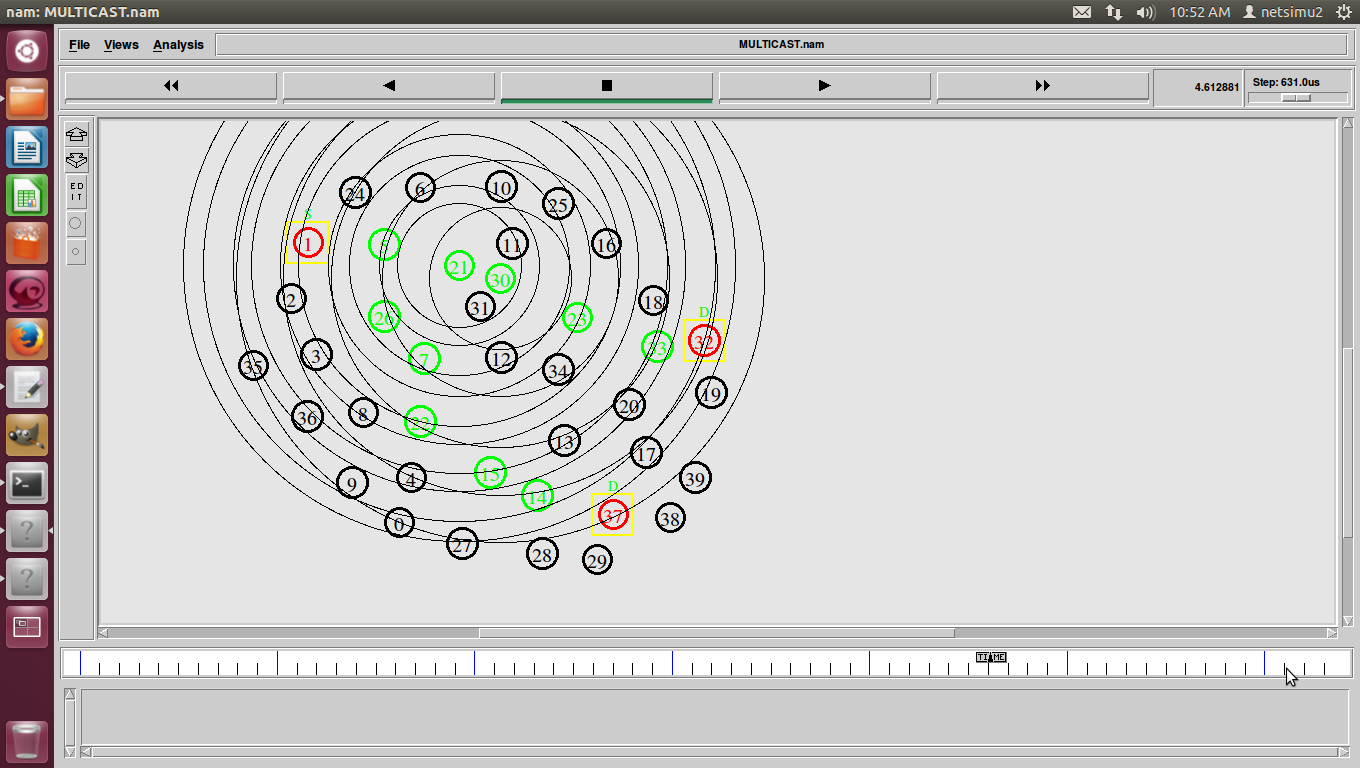

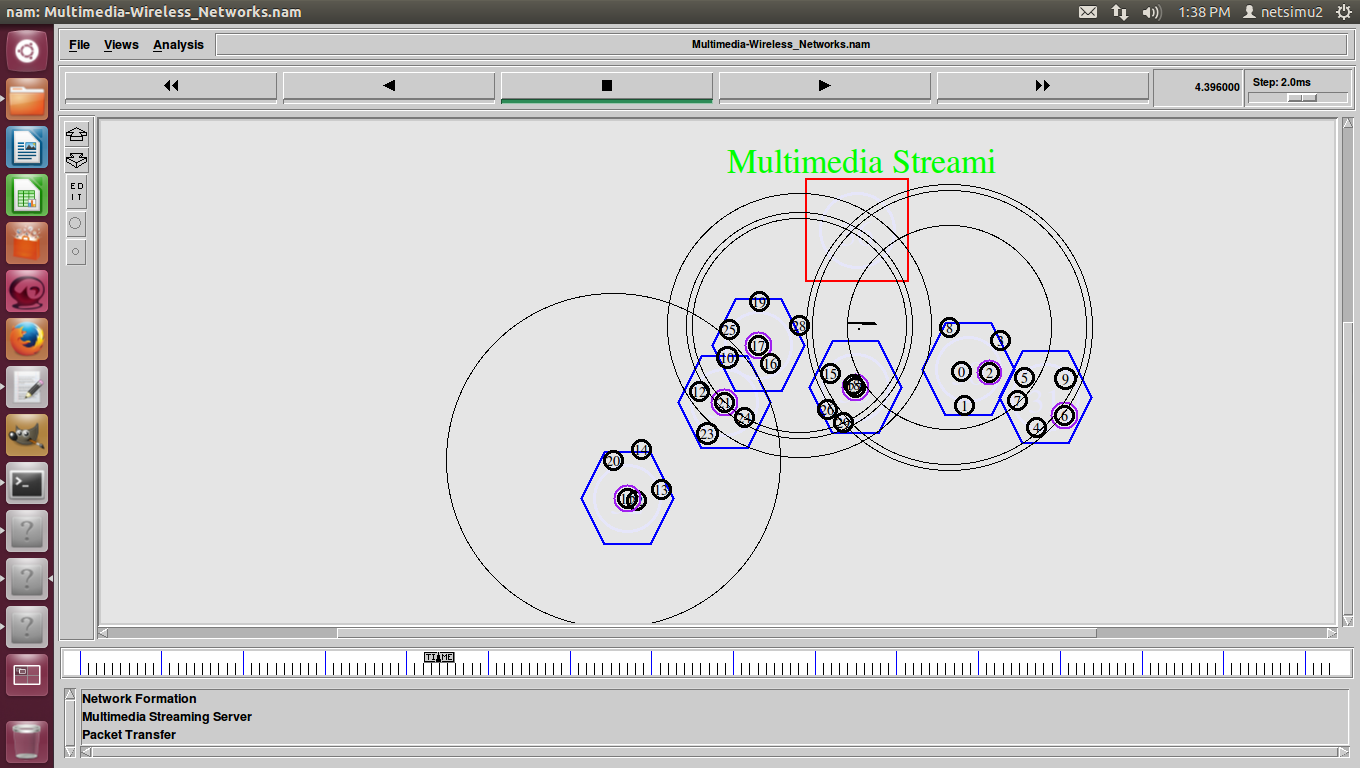

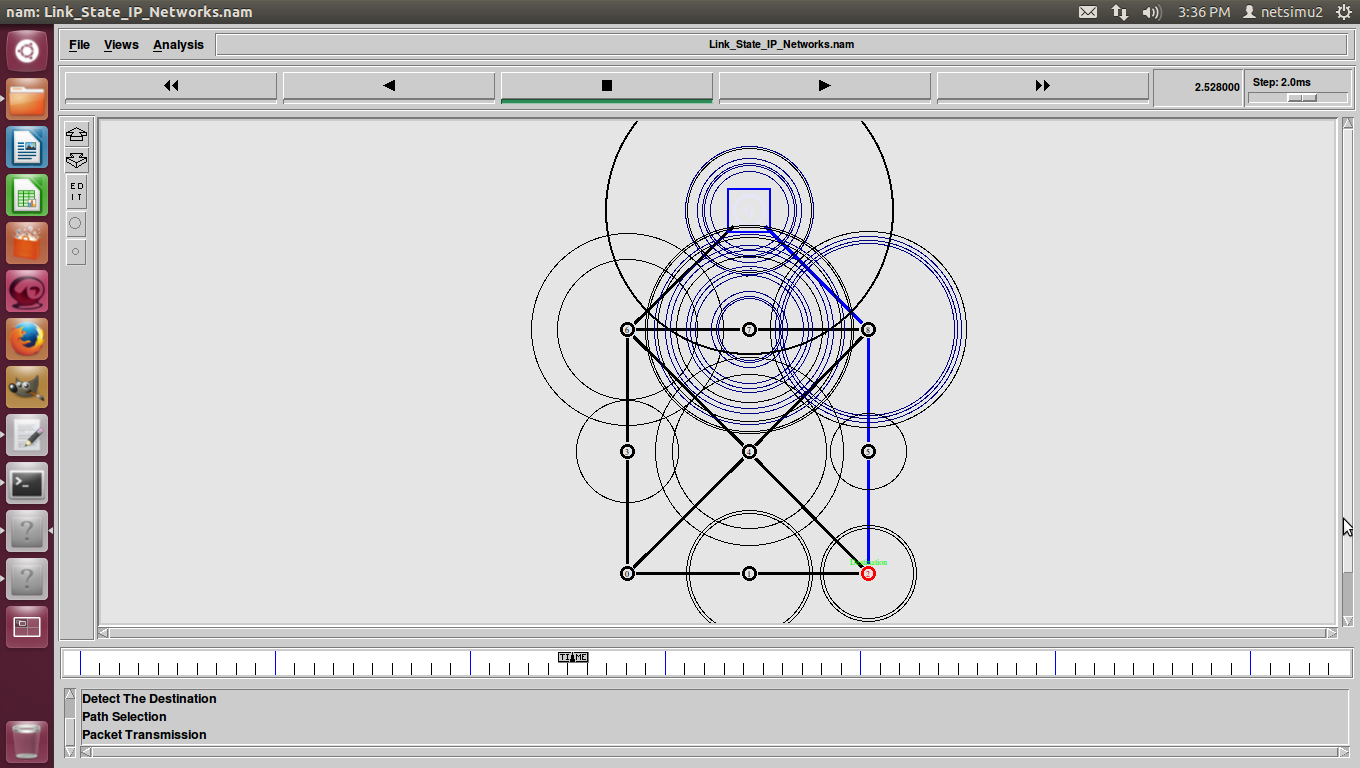

Tunable sparse network coding (TSNC) is a promising approach for trading off computational complexity against delay performance. This paper advocates the judicious use of feedback as the key not only to making TSNC practical but also to delivering consistent, well-controlled delay performance to end devices. We propose and analyze a TSNC design that can be incorporated into both unicast and multicast data flows.

We implement our approach in C++ and compare it with random linear network coding (RLNC) and sparse variants of RLNC, implemented in the fastest network coding library to date. Our measurements show that our TSNC mechanism achieves up to four times the processing speed of an optimized RLNC implementation, with a minimal penalty in delay performance. Finally, we show that even a limited number of feedback packets can radically improve the complexity-delay trade-off.